43 machine learning noisy labels

GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... Jun 15, 2022 · Learning from Noisy Labels via Dynamic Loss Thresholding. Evaluating Multi-label Classifiers with Noisy Labels. Self-Supervised Noisy Label Learning for Source-Free Unsupervised Domain Adaptation. Transform consistency for learning with noisy labels. Learning to Combat Noisy Labels via Classification Margins.

Meta-learning from noisy labels :: Päpper's Machine Learning Blog ... Label noise introduction: Training machine learning models requires a lot of data. Often, it is quite costly to obtain sufficient data for your problem. Sometimes, you might even need domain experts, who don't have much time and are expensive. One option that you can look into is getting cheaper, lower quality data, i.e. having less experienced people annotate data. This usually has the ...

Machine Learning Algorithm - an overview | ScienceDirect Topics Machine Learning Algorithm. An ML algorithm, which is a part of AI, uses an assortment of accurate, probabilistic, and upgraded techniques that empower computers to pick up from the past point of reference and perceive hard-to-perceive patterns from massive, noisy, or complex datasets.

Machine learning noisy labels

How to Become a Machine Learning Engineer: 15 Steps - wikiHow Mar 05, 2021 · Machine Learning from Stanford, an introductory class focused on breaking down complex concepts related to the field. Learning from Data from Caltech, an introductory class focused on mathematical theory and algorithmic application. Practical Machine Learning from Johns Hopkins University, a class focused on data prediction.

How noisy is your dataset? Sample and weight training samples to ... Second, the label noisy stands for a dataset crawled (for example, by icrawler using keywords) ... When training a machine learning model, due to the limited capacity of computer memory, the set ...

Data Noise and Label Noise in Machine Learning This type of label noise reflects a general insecurity in labelling and is relatively easy to overcome when α is small [5]. Figure 2 (own image): symmetric label noise. Asymmetric Label Noise, All Labels: A randomly chosen α% of all labels i are switched to label i + 1, or to 0 for the maximum i (see Figure 3).
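The asymmetric label noise described above (a random α% of labels i switched to i + 1, with the maximum label wrapping to 0) can be sketched in a few lines of NumPy. This is only an illustration: the function name `add_asymmetric_label_noise`, the toy label array, and the choice to pass α as a fraction rather than a percentage are assumptions made here, not taken from the quoted article.

```python
import numpy as np

def add_asymmetric_label_noise(labels, alpha, num_classes, seed=0):
    """Switch a randomly chosen fraction `alpha` of labels i to i + 1.

    The maximum label wraps around to 0. `alpha` is a fraction (0.3 = 30%).
    Hypothetical helper written for illustration only.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    noisy = labels.copy()
    n_flip = int(round(alpha * len(labels)))
    # Pick the positions to corrupt without replacement.
    flip_idx = rng.choice(len(labels), size=n_flip, replace=False)
    noisy[flip_idx] = (labels[flip_idx] + 1) % num_classes
    return noisy, flip_idx

# Toy data (made up for the example).
labels = np.array([0, 1, 2, 2, 1, 0, 2, 1, 0, 1])
noisy, flipped = add_asymmetric_label_noise(labels, alpha=0.3, num_classes=3)
print("flipped positions:", np.sort(flipped))
print("noisy labels:     ", noisy)
```

Because every corrupted label moves to one specific neighbouring class, the errors are systematic rather than spread evenly over all classes.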

Machine learning noisy labels. – Toronto Machine Learning His work on Multitask Learning helped create interest in a subfield of machine learning called Transfer Learning. Rich received an NSF CAREER Award in 2004 (for Meta Clustering), best paper awards in 2005 (with Alex Niculescu-Mizil), 2007 (with Daria Sorokina), and 2014 (with Todd Kulesza, Saleema Amershi, Danyel Fisher, and Denis Charles), and ...

Noisy Labels: Theoretical Approaches/Empirical Studies We demonstrate that several proposed learning-with-noisy-labels solutions in the literature relate closely to negative label smoothing (NLS), which is defined as using a negative weight to combine the hard and soft labels. We unify (positive) LS and NLS into GLS, and provide understandings for the properties of GLS when learning with noisy labels.

PDF Cost-Sensitive Learning with Noisy Labels Keywords: class-conditional label noise, statistical consistency, cost-sensitive learning 1. Introduction Learning from noisy training data is a problem of theoretical as well as practical interest in machine learning. In many applications such as learning to classify images, it is often the case that the labels are noisy.

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...
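For the label smoothing excerpt above, here is a minimal sketch of how positive and negative label smoothing can share one formula. It assumes the smoothed target is written as (1 - r) * one_hot + r * uniform, where r > 0 gives ordinary label smoothing and r < 0 gives negative label smoothing. That exact form, the function name, and the value r = 0.3 are assumptions for illustration and are not quoted from the excerpt.

```python
import numpy as np

def generalized_label_smoothing(labels, num_classes, r):
    """Combine hard (one-hot) and soft (uniform) targets with weight r.

    r > 0: positive label smoothing; r < 0: negative label smoothing;
    r = 0: plain one-hot targets. Assumed form, for illustration only.
    """
    one_hot = np.eye(num_classes)[np.asarray(labels)]
    uniform = np.full((1, num_classes), 1.0 / num_classes)
    return (1.0 - r) * one_hot + r * uniform

labels = [0, 2]
pos = generalized_label_smoothing(labels, num_classes=3, r=0.3)
neg = generalized_label_smoothing(labels, num_classes=3, r=-0.3)
print("positive smoothing:\n", pos)
print("negative smoothing:\n", neg)
```

With a negative weight the target for the labelled class exceeds 1 and the other entries become negative, while each row still sums to 1.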

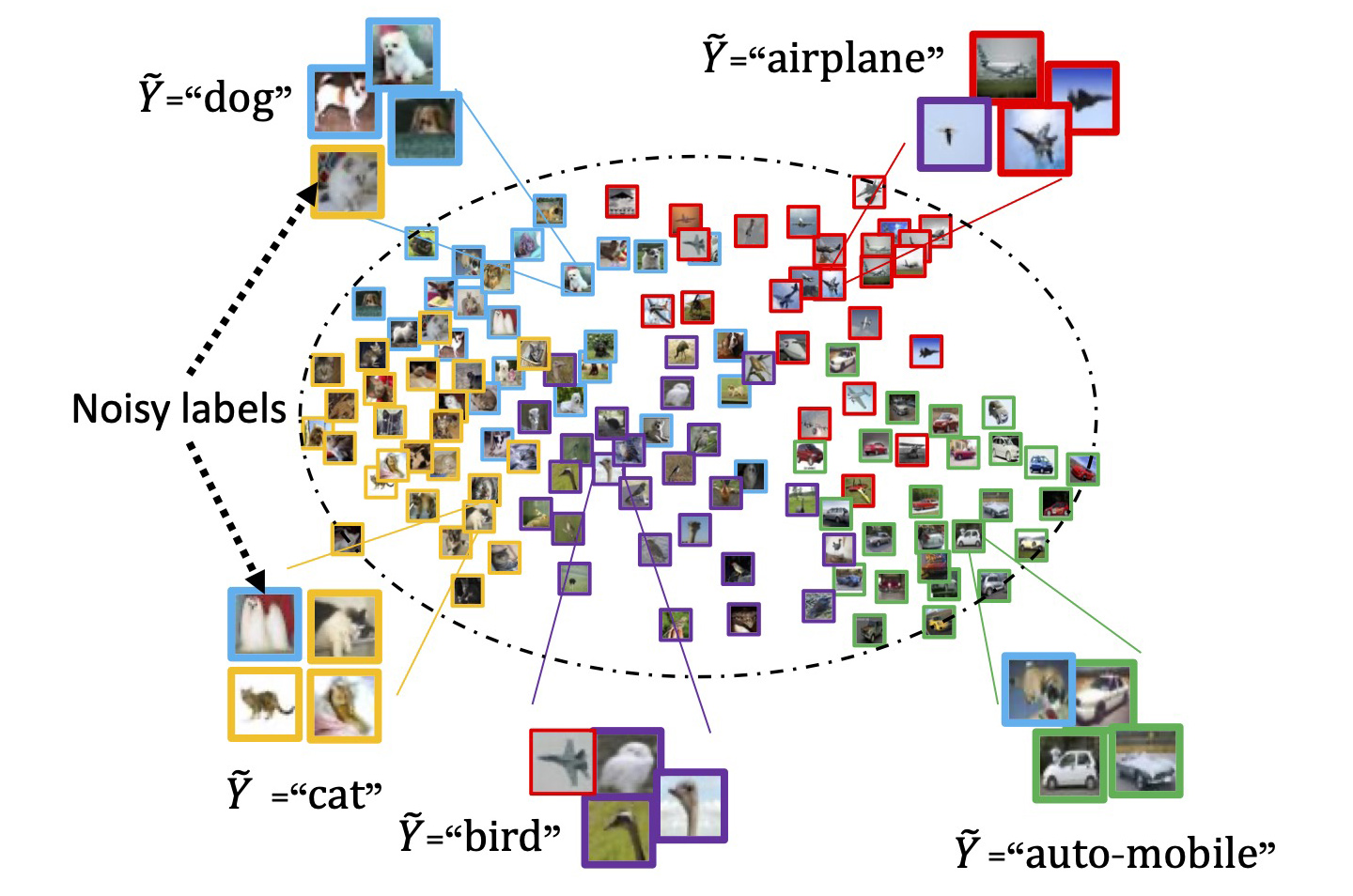

Learning from Noisy Labels with Deep Neural Networks: A Survey As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning applications. In this survey, we first describe the problem of learning with label noise from a supervised learning perspective.

Event-Driven Architecture Can Clean Up Your Noisy Machine Learning Labels Machine learning requires a data input to make decisions. When talking about supervised machine learning, one of the most important elements of that data is its labels. In Riskified's case, the ...

Title: To Aggregate or Not? Learning with Separate Noisy Labels Abstract: The rawly collected training data often comes with separate noisy labels collected from multiple imperfect annotators (e.g., via crowdsourcing). Typically one would first aggregate the separate noisy labels into one and apply standard training methods. The literature has also studied extensively on effective aggregation approaches.

How Noisy Labels Impact Machine Learning Models - KDnuggets While this study demonstrates that ML systems have a basic ability to handle mislabeling, many practical applications of ML are faced with complications that make label noise more of a problem. These complications include not being able to create very large training sets, and systematic labeling errors that confuse machine learning.

Understanding and Utilizing Deep Neural Networks Trained with Noisy Labels Noisy labels are ubiquitous in real-world datasets, which poses a challenge for robustly training deep neural networks (DNNs) as DNNs usually have the high capacity to memorize the noisy labels. In this paper, we find that the test accuracy can be quantitatively characterized in terms of the noise ratio in datasets.

How to handle noisy labels for robust learning from uncertainty Most deep neural networks (DNNs) are trained with large amounts of noisy labels when they are applied. As DNNs have the high capacity to fit any noisy labels, it is known to be difficult to train DNNs robustly with noisy labels. These noisy labels cause the performance degradation of DNNs due to the memorization effect by over-fitting.

Machine Learning and Data Mining Lecture Notes 1.1 Types of Machine Learning Some of the main types of machine learning are: 1. Supervised Learning, in which the training data is labeled with the correct answers, e.g., “spam” or “ham.” The two most common types of supervised learning are classification (where the outputs are discrete labels, as in spam filtering) and regression ...

GitHub - cleanlab/cleanlab: The standard data-centric AI ... # Generate noisy labels using the noise_matrix. Guarantees exact amount of noise in labels. `from cleanlab.benchmarking.noise_generation import generate_noisy_labels` `s_noisy_labels = generate_noisy_labels(y_hidden_actual_labels, noise_matrix)` # This package is full of other useful methods for learning with noisy labels.
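To make the idea of generating noisy labels from a noise matrix concrete, the sketch below samples noisy labels in plain NumPy. It does not use cleanlab and is not its implementation. The convention that entry [s, y] holds P(noisy label = s | true label = y), the function name `sample_noisy_labels`, and the example matrix are assumptions made for this illustration. Unlike the cleanlab snippet quoted above, independent sampling does not guarantee an exact amount of noise.

```python
import numpy as np

def sample_noisy_labels(true_labels, noise_matrix, seed=0):
    """Draw one noisy label per example from a class-conditional noise matrix.

    Assumed convention: noise_matrix[s, y] = P(noisy = s | true = y),
    so every column must sum to 1. Hypothetical helper, not a cleanlab API.
    """
    rng = np.random.default_rng(seed)
    noise_matrix = np.asarray(noise_matrix, dtype=float)
    if not np.allclose(noise_matrix.sum(axis=0), 1.0):
        raise ValueError("each column of noise_matrix must sum to 1")
    num_classes = noise_matrix.shape[0]
    return np.array(
        [rng.choice(num_classes, p=noise_matrix[:, y]) for y in true_labels]
    )

# Example matrix (made up): class 0 is flipped 10% of the time, class 1 20%.
noise_matrix = np.array([[0.9, 0.2],
                         [0.1, 0.8]])
y_true = np.array([0] * 500 + [1] * 500)
y_noisy = sample_noisy_labels(y_true, noise_matrix)
flip_rate_0 = float(np.mean(y_noisy[y_true == 0] != 0))
flip_rate_1 = float(np.mean(y_noisy[y_true == 1] != 1))
print("observed flip rate, class 0:", flip_rate_0)
print("observed flip rate, class 1:", flip_rate_1)
```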

How Noisy Labels Impact Machine Learning Models | iMerit Supervised Machine Learning requires labeled training data, and large ML systems need large amounts of training data. Labeling training data is resource intensive, and while techniques such as crowd sourcing and web scraping can help, they can be error-prone, adding 'label noise' to training sets.

How to Improve Deep Learning Model Robustness by Adding Noise Import the noise layer with `from keras.layers import GaussianNoise`, then define it with `layer = GaussianNoise(0.1)`. The output of the layer will have the same shape as the input, with the only modification being the addition of noise to the values.
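The behaviour described above (same output shape, values perturbed by noise) can be mimicked in plain NumPy to see what such a layer does to a batch. This is a sketch, not the Keras implementation: the function `gaussian_noise`, the explicit `training` flag, and the toy input are assumptions for illustration, with zero-mean noise of standard deviation 0.1 added only in training mode.

```python
import numpy as np

def gaussian_noise(x, stddev, training, rng):
    """Add zero-mean Gaussian noise to x when training; pass x through otherwise.

    Hypothetical NumPy stand-in for a noise layer, for illustration only.
    """
    x = np.asarray(x, dtype=float)
    if not training:
        return x
    return x + rng.normal(loc=0.0, scale=stddev, size=x.shape)

rng = np.random.default_rng(0)
x = np.ones((2, 3))  # toy batch of 2 examples with 3 features
noisy = gaussian_noise(x, stddev=0.1, training=True, rng=rng)
clean = gaussian_noise(x, stddev=0.1, training=False, rng=rng)
print("training output:\n", noisy)
print("inference output:\n", clean)
```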

Using Noisy Labels to Train Deep Learning Models on Satellite Imagery Experimenting with noisy labels In order to measure the relationship between label noise and model accuracy, we needed a way to vary the amount of label noise, while keeping other variables constant. To do this, we took an off-the-shelf dataset, and systematically introduced errors into the labels.

Dealing with noisy training labels in text ... - Stack Overflow Works with sklearn/pyTorch/Tensorflow/FastText/etc. `lnl = LearningWithNoisyLabels(clf=LogisticRegression())` `lnl.fit(X=X_train_data, s=train_noisy_labels)` # Estimate the predictions you would have gotten by training with *no* label errors. `predicted_test_labels = lnl.predict(X_test)`

Noisy Labels in Remote Sensing Annotating RS images with multi-labels at large-scale to drive DL studies is time consuming, complex, and costly in operational scenarios. To address this issue, existing thematic products (e.g., Corine Land-Cover map) can be used; however, the land-use and land-cover labels through these products can be incomplete and noisy. Handling data with incomplete and noisy labels may result in ...

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2018-WACV - A semi-supervised two-stage approach to learning from noisy labels. [Paper] 2018-NIPS - Co-teaching: Robust Training of Deep Neural Networks with Extremely Noisy Labels. [Paper] [Code] 2018-NIPS - Masking: A New Perspective of Noisy Supervision. [Paper] [Code]

machine learning - Classification with noisy labels? - Cross Validated Let $p_t$ be a vector of class probabilities produced by the neural network and $\ell(y_t, p_t)$ be the cross-entropy loss for label $y_t$. To explicitly take into account the assumption that 30% of the labels are noise (assumed to be uniformly random), we could change our model to produce $\tilde{p}_t = 0.3/N + 0.7\,p_t$ instead and optimize
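The mixing step in the answer above can be tried out numerically. The sketch below assumes that the quantity being optimized is the cross-entropy evaluated on the mixed probabilities 0.3 / N + 0.7 * p, since the excerpt is cut off before stating it. The function name `noise_aware_cross_entropy` and the toy predictions are made up for the example.

```python
import numpy as np

def noise_aware_cross_entropy(probs, labels, noise_rate=0.3):
    """Mean cross-entropy on probabilities mixed with a uniform distribution.

    mixed = noise_rate / N + (1 - noise_rate) * probs, with N classes.
    noise_rate = 0 recovers the ordinary cross-entropy.
    Hypothetical helper, for illustration only.
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    num_classes = probs.shape[1]
    mixed = noise_rate / num_classes + (1.0 - noise_rate) * probs
    picked = mixed[np.arange(len(labels)), labels]
    return float(-np.log(picked).mean())

# Two confident predictions; the second label disagrees with the model,
# as a mislabelled example would.
probs = np.array([[0.98, 0.01, 0.01],
                  [0.01, 0.98, 0.01]])
labels = np.array([0, 2])
plain = noise_aware_cross_entropy(probs, labels, noise_rate=0.0)
robust = noise_aware_cross_entropy(probs, labels, noise_rate=0.3)
print("plain cross-entropy:      ", plain)
print("noise-aware cross-entropy:", robust)
```

Because the mixed probability of any class never drops below 0.3 / N, the per-example loss is bounded by -log(0.3 / N), so a single mislabelled example cannot dominate the objective.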

[P] Noisy Labels and Label Smoothing : MachineLearning - reddit My best guess is that this 'label smoothing' thing isn't going to change the optimal classification boundary at all (in a maximum-likelihood sense) if the "smoothing" is symmetrical wrt. the labels, and even the non-symmetric case can be addressed in a rather more straightforward way, simply by adjusting the weight of more "uncertain" points in the dataset.

PDF Learning with Noisy Labels - NeurIPS (also listed as PDF Learning with Noisy Labels - Carnegie Mellon University) Noisy labels are denoted by $\tilde{y}$. Let $f: \mathcal{X} \to \mathbb{R}$ be some real-valued decision function. The risk of $f$ w.r.t. the 0-1 loss is given by $R_D(f) = \mathbb{E}_{(X,Y)\sim D}\left[\mathbf{1}\{\operatorname{sign}(f(X)) \neq Y\}\right]$. The optimal decision function (called Bayes optimal) that minimizes $R_D$ over all real-valued decision functions is given by $f^\star(x) = \operatorname{sign}(\eta(x) - 1/2)$, where $\eta(x) = P(Y = 1 \mid x)$.
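The 0-1 risk and the Bayes optimal decision function defined above can be checked on simulated data. This is a small sketch under stated assumptions: labels take values in {-1, +1}, and the toy distribution (x uniform on [0, 1] with η(x) = x) is invented purely for the example. The function names are hypothetical.

```python
import numpy as np

def empirical_zero_one_risk(scores, y):
    """Fraction of examples with sign(f(x)) != y, for labels in {-1, +1}."""
    return float(np.mean(np.sign(scores) != y))

def bayes_optimal_decision(eta):
    """f*(x) = sign(eta(x) - 1/2), where eta(x) = P(Y = 1 | x)."""
    return np.sign(np.asarray(eta) - 0.5)

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=20000)
eta = x  # toy assumption: P(Y = 1 | x) = x
y = np.where(rng.uniform(size=x.size) < eta, 1, -1)

bayes_risk = empirical_zero_one_risk(bayes_optimal_decision(eta), y)
always_positive_risk = empirical_zero_one_risk(np.ones_like(x), y)
print("risk of Bayes optimal rule:  ", bayes_risk)
print("risk of always predicting +1:", always_positive_risk)
```

In this toy setting the Bayes risk is the expectation of min(η(x), 1 - η(x)), which is 1/4, so the first printed value should be close to 0.25 and clearly below the constant predictor's risk.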

Deep learning with noisy labels: Exploring techniques and remedies in medical image analysis. Abstract: Supervised training of deep learning models requires large labeled datasets. There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of label noise has not received sufficient attention.

On Learning Contrastive Representations for Learning with Noisy Labels Deep neural networks are able to memorize noisy labels easily with a softmax cross-entropy (CE) loss. Previous studies that attempted to address this issue focus on incorporating a noise-robust loss function into the CE loss. However, the memorization issue is alleviated but still remains due to the non-robust CE loss.

Machine learning - Wikipedia In weakly supervised learning, the training labels are noisy, limited, or imprecise; ... Embedded Machine Learning is a sub-field of machine learning, ...

An Introduction to Classification Using Mislabeled Data Figure 1: Impact of 30% label noise on LinearSVC 1. Label noise can significantly harm performance: Noise in a dataset can mainly be of two types: feature noise and label noise; and several research papers have pointed out that label noise usually is a lot more harmful than feature noise.

PDF Meta Label Correction for Noisy Label Learning Labeled data largely determines whether a machine learning system can perform well on a task or not, as noisy labels or corrupted labels could cause dramatic performance drop (Nettleton, Orriols-Puig, and Fornells 2010). The problem gets even worse when an adversarial rival intentionally injects noise into the labels (Reed et al. 2014).

Learning Soft Labels via Meta Learning - Apple Machine Learning Research When applied to datasets containing noisy labels, the learned labels correct the annotation mistakes and improve over state-of-the-art by a significant margin. Finally, we show that learned labels capture semantic relationship between classes, and thereby improve teacher models for the downstream task of distillation.

What Are Features And Labels In Machine Learning? | Codeing School ~ Codeing School - Learn Code ...

